In this post, we explore OpenAI's new Advanced Voice Mode (AVM), focusing on its capabilities, its limitations, and how it performs in Somali based on our tests. I teamed up with Muhammad (@muhaksim) to put the new mode through its paces, and we came away with some interesting findings!

What is OpenAI's Advanced Voice Mode?

OpenAI's Advanced Voice Mode is a newly launched ChatGPT feature that makes voice conversations more natural and interactive. It is part of OpenAI's latest work in natural language processing and can recognize and produce speech in multiple languages.

Key Aspects of AVM

- Launch and Availability: Rolled out to ChatGPT Plus and Team subscribers on September 24, 2024. OpenAI plans to extend it to Enterprise and Edu customers soon, but it is currently unavailable in several regions, including the EU and UK.

- New Interface: Features a blue animated sphere instead of the previous black dots, making interactions more engaging.

- Voice Options: Introduces five new voices (Arbor, Maple, Sol, Spruce, and Vale), bringing the total to nine.

- Technology: Built on GPT-4o's native audio capabilities, allowing real-time conversations that can pick up on non-verbal cues.

Key Features of AVM

- Natural Conversations: Picks up on speaking pace and emotional tone, making interactions more dynamic.

- Multilingual Support: Switches effortlessly between languages such as English and Somali.

- Real-Time Adaptation: Adjusts its pronunciation on the fly in response to feedback during a conversation.

- Customization: Allows personalization through Custom Instructions and Memory features (though not fully integrated yet).

- Interactive Learning: Adapts to specific pronunciations and learning patterns.

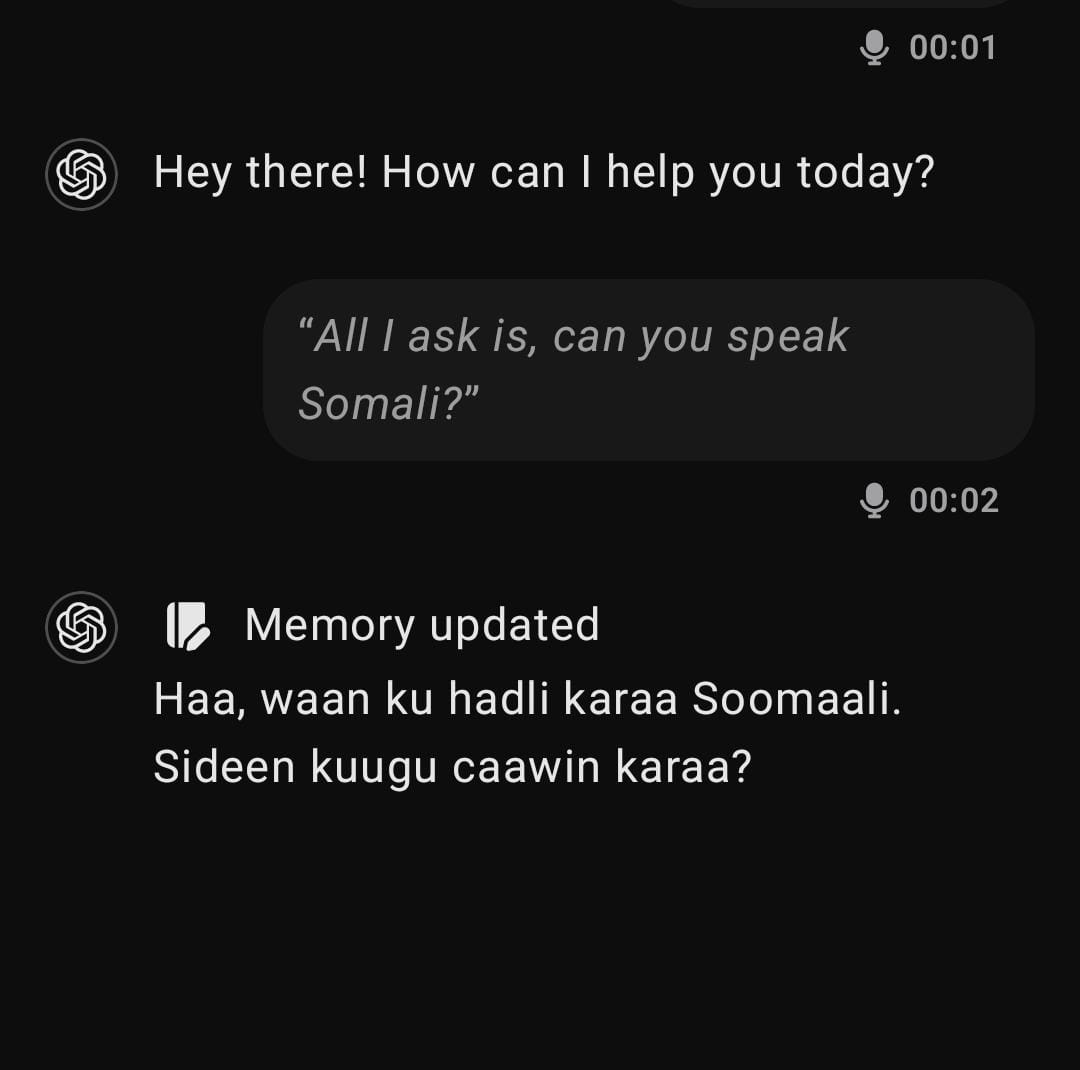

Screenshot 1:

Our Test: Performance in Somali

🌟 What We Liked

- Effortless Language Switching: Smooth transitions between Somali and English.

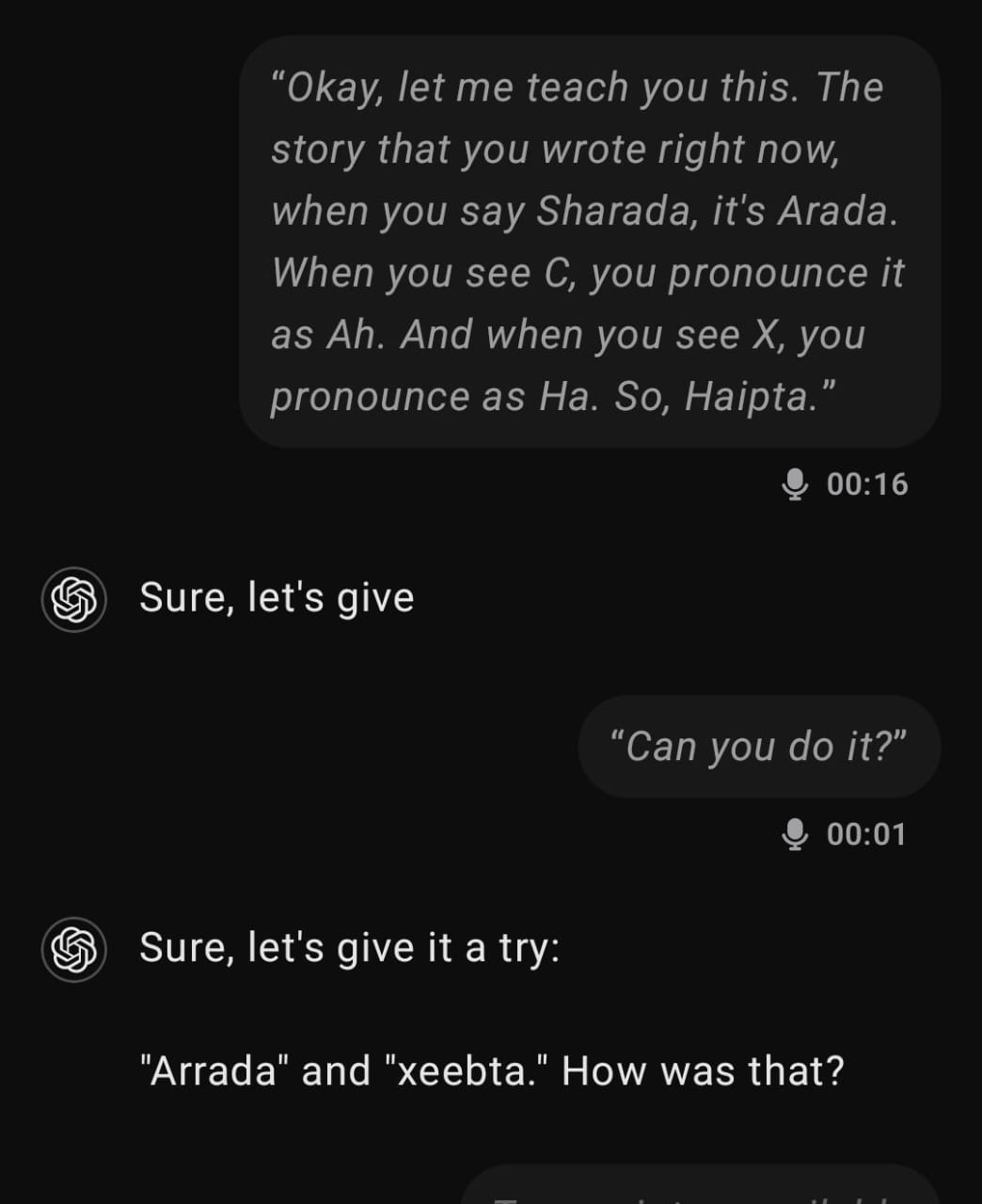

- Learning Capability: Quickly adapted when we corrected its pronunciation, for example of the Somali letter "C."

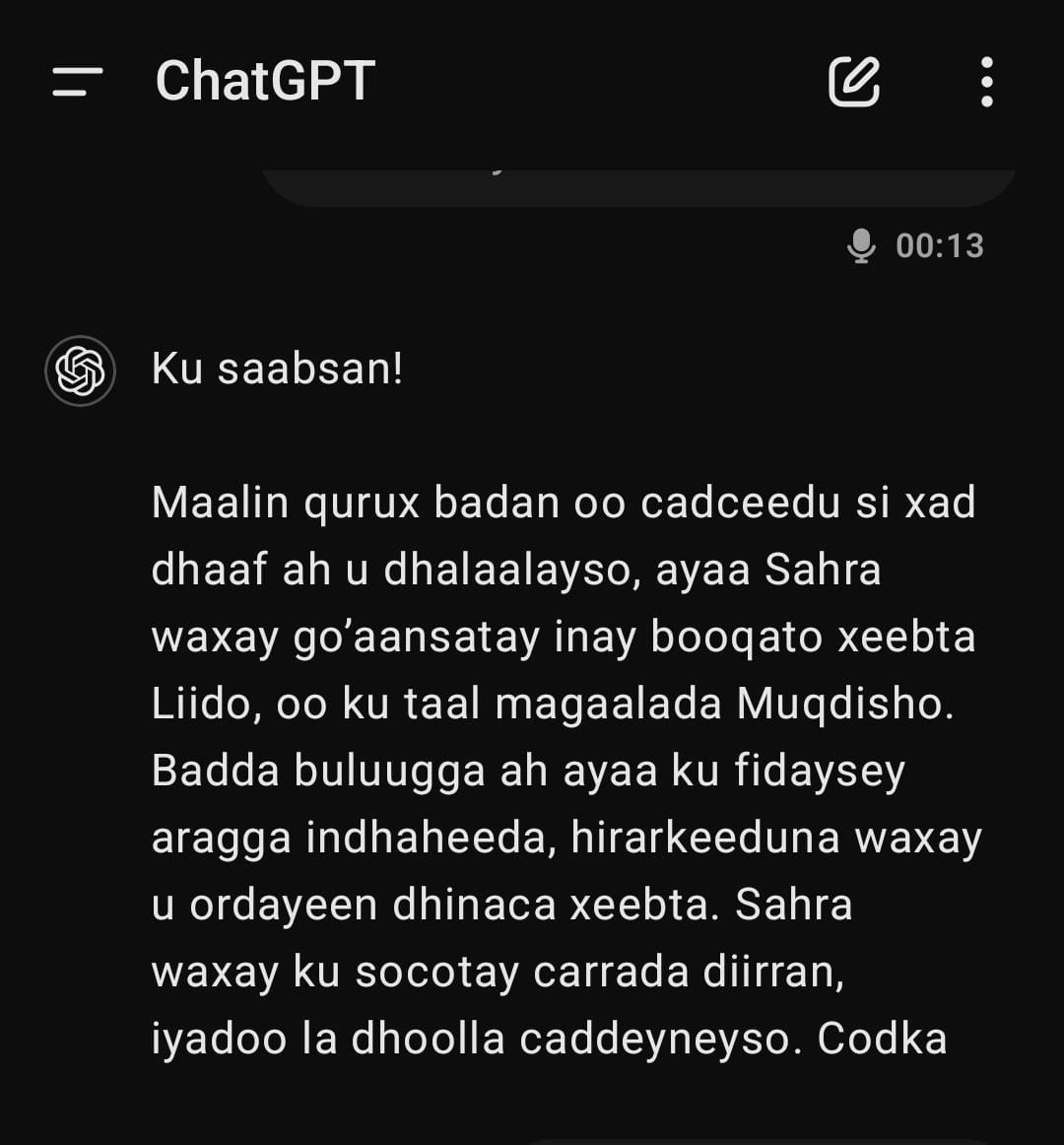

- Text Generation: Excellent GPT-4o-powered text generation in Somali, with high fluency and coherence.

Screenshot 2:

🚩 Areas for Improvement

- Transcription Accuracy: Inconsistent at times, especially with random characters or words.

- Pronunciation Challenges: Mispronounced Somali letters such as "X" and "C," which represent pharyngeal consonants (/ħ/ and /ʕ/) that English lacks.

Improvements We Hope to See

- Enhanced Transcription: Especially for non-Western languages like Somali.

- Better Handling of Complex Phonetics: Improved pronunciation of language-specific sounds.

- Persistent Learning: Ability to retain learned pronunciations across multiple sessions.

Technical Insights and Limitations

- Usage Limits: Subscribers may face restrictions on daily voice interaction time.

- Regional Availability: Not yet available in all regions, notably absent in the EU and UK.

- Ongoing Improvements: OpenAI says it has improved accent recognition and conversational smoothness since the alpha test.

Final Thoughts

Advanced Voice Mode is a significant step toward more intuitive and engaging AI interactions. Challenges remain, particularly for languages like Somali, but the progress is exciting, and future iterations will likely address the current limitations and make it more robust across diverse languages.

Screenshot 3:

Special Thanks

A special thanks to Muhammed Khasim for his invaluable insights and feedback during our testing of OpenAI's Advanced Voice Mode.

Watch Our Test on YouTube

We recorded our test of AVM in action. Check out the full video here:

Remember: while AVM feels almost human in its interactions, it doesn't possess genuine emotions or consciousness. It's a sophisticated model designed to interpret user input and respond in a natural, conversational way.